Executive Summary:

In today’s digital-first world, even the most beloved businesses need a robust online presence. Pool Engineering, known for its warm swimming pool experience, recognized the need to scale its digital operations to meet growing customer expectations. With the help of Cogniv Technologies and AWS, the company set out to modernize its infrastructure, streamline deployments, and improve reliability.

The Challenge: Building a Digital Foundation

The Pool Engineering team faced several hurdles at the start of its digital journey:

- Uncertainty around hosting platforms

- Scalability limitations and potential downtime

- Lack of automated deployment and monitoring tools

- Security vulnerabilities due to manual configurations

These challenges threatened to delay their online launch and compromise user experience during peak hours.

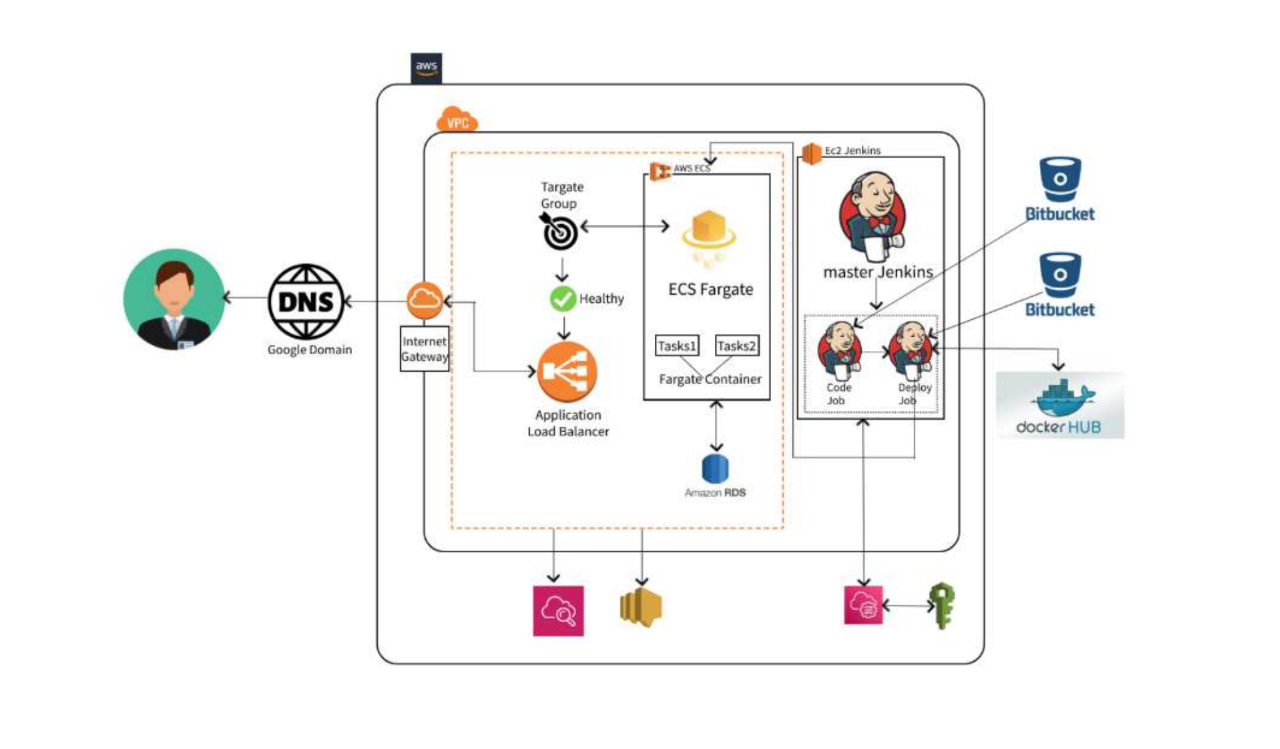

The Solution: Amazon Elastic Container Service (Amazon ECS) + CI/CD Automation

To overcome these obstacles, Cogniv Technologies implemented a fully automated, cloud-native solution using Amazon ECS and a CI/CD pipeline.

How the Partner Resolved the Customer Challenge

Designed a Fully Automated 3-Tier Architecture

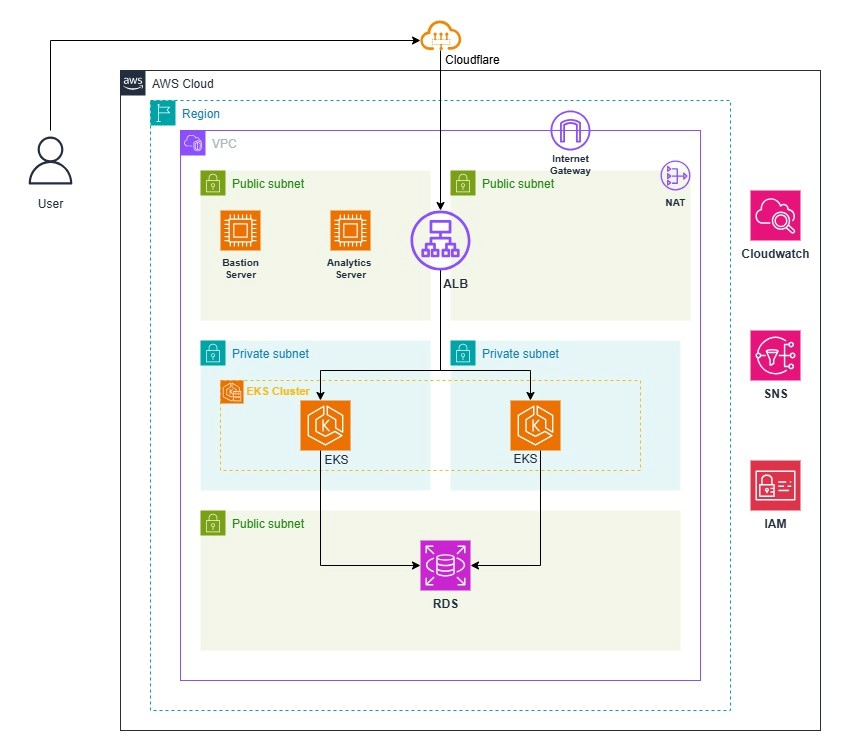

Used AWS CloudFormation to provision the infrastructure as code, including a secure VPC, an application layer (Amazon ECS), and a database layer (Amazon RDS).
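To make the three tiers concrete, here is a minimal sketch of what such a template skeleton could look like, built as a plain Python dictionary and printed as JSON. It is not the template used in this project: every logical ID (`AppVpc`, `AppCluster`, `AppDatabase`), the CIDR range, the PostgreSQL engine, and the instance size are assumptions chosen for illustration, and subnets, security groups, and the ECS service itself are left out.

```python
import json


def build_three_tier_template() -> dict:
    """Return a skeleton CloudFormation template as a plain dict.

    All logical IDs and property values are illustrative assumptions,
    not the values used in the project described above.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative 3-tier skeleton: network, application, database",
        "Parameters": {
            # NoEcho masks the password in console and API output.
            "DbPassword": {"Type": "String", "NoEcho": True},
        },
        "Resources": {
            # Network tier: a dedicated VPC (address range is an assumption).
            "AppVpc": {
                "Type": "AWS::EC2::VPC",
                "Properties": {
                    "CidrBlock": "10.0.0.0/16",
                    "EnableDnsSupport": True,
                    "EnableDnsHostnames": True,
                },
            },
            # Application tier: the ECS cluster that hosts the containers.
            "AppCluster": {"Type": "AWS::ECS::Cluster"},
            # Database tier: engine and size are assumptions.
            "AppDatabase": {
                "Type": "AWS::RDS::DBInstance",
                "Properties": {
                    "Engine": "postgres",
                    "DBInstanceClass": "db.t3.micro",
                    "AllocatedStorage": "20",
                    "MasterUsername": "appadmin",
                    "MasterUserPassword": {"Ref": "DbPassword"},
                    "PubliclyAccessible": False,
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_three_tier_template(), indent=2))
```

Keeping the template in version control alongside the application code is what allows every environment to be recreated from the same definition.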

Implemented Secure Networking:

Deployed resources within a custom VPC for isolated and secure network access. Placed the RDS database in private subnets to protect sensitive data.
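One common way to express this isolation in CloudFormation is sketched below as Python dictionaries. It assumes a VPC with the hypothetical logical ID `AppVpc` is defined elsewhere in the same template; the subnet ranges, the other logical IDs, and the default port 5432 are also assumptions. The database security group accepts traffic only from the application security group, not from any IP range.

```python
def private_subnet(cidr: str, az_index: int) -> dict:
    """Subnet without public IPs. A subnet is only truly private when its
    route table has no route to an internet gateway (not shown here)."""
    return {
        "Type": "AWS::EC2::Subnet",
        "Properties": {
            "VpcId": {"Ref": "AppVpc"},  # hypothetical VPC logical ID
            "CidrBlock": cidr,
            "AvailabilityZone": {"Fn::Select": [str(az_index), {"Fn::GetAZs": ""}]},
            "MapPublicIpOnLaunch": False,
        },
    }


def build_network_resources(db_port: int = 5432) -> dict:
    """Return illustrative CloudFormation resources for the database network."""
    return {
        # Two subnets in different Availability Zones, as RDS subnet groups require.
        "PrivateSubnetA": private_subnet("10.0.10.0/24", 0),
        "PrivateSubnetB": private_subnet("10.0.11.0/24", 1),
        "DbSubnetGroup": {
            "Type": "AWS::RDS::DBSubnetGroup",
            "Properties": {
                "DBSubnetGroupDescription": "Private subnets for the database tier",
                "SubnetIds": [{"Ref": "PrivateSubnetA"}, {"Ref": "PrivateSubnetB"}],
            },
        },
        "AppSecurityGroup": {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {
                "GroupDescription": "Application containers",
                "VpcId": {"Ref": "AppVpc"},
            },
        },
        "DbSecurityGroup": {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {
                "GroupDescription": "Database access from the application tier only",
                "VpcId": {"Ref": "AppVpc"},
                "SecurityGroupIngress": [
                    {
                        "IpProtocol": "tcp",
                        "FromPort": db_port,
                        "ToPort": db_port,
                        # Source is another security group, not a CIDR range.
                        "SourceSecurityGroupId": {
                            "Fn::GetAtt": ["AppSecurityGroup", "GroupId"]
                        },
                    }
                ],
            },
        },
    }
```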

Deployed Scalable Application Hosting:

Used Amazon ECS to host the Dockerized application. Enabled automatic scaling, load balancing, and health monitoring.
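As an illustration of how automatic scaling for an ECS service can be declared, the sketch below generates an Application Auto Scaling target and a target-tracking policy on average CPU utilization. The case study does not state which metric, task counts, or thresholds were used, so the function name, the defaults (2 to 6 tasks, 60% CPU), and the logical IDs are all assumptions.

```python
def build_scaling_resources(
    cluster_name: str,
    service_name: str,
    min_tasks: int = 2,
    max_tasks: int = 6,
    cpu_target: float = 60.0,
) -> dict:
    """Return illustrative CloudFormation resources that scale an ECS
    service between min_tasks and max_tasks based on average CPU."""
    if not 0 < min_tasks <= max_tasks:
        raise ValueError("expected 0 < min_tasks <= max_tasks")
    if not 0 < cpu_target <= 100:
        raise ValueError("cpu_target is a percentage between 0 and 100")

    return {
        # Registers the service's desired task count as something that can scale.
        "ServiceScalableTarget": {
            "Type": "AWS::ApplicationAutoScaling::ScalableTarget",
            "Properties": {
                "ServiceNamespace": "ecs",
                "ScalableDimension": "ecs:service:DesiredCount",
                "ResourceId": f"service/{cluster_name}/{service_name}",
                "MinCapacity": min_tasks,
                "MaxCapacity": max_tasks,
            },
        },
        # Adds or removes tasks to keep average CPU near the target value.
        "ServiceCpuScalingPolicy": {
            "Type": "AWS::ApplicationAutoScaling::ScalingPolicy",
            "Properties": {
                "PolicyName": "cpu-target-tracking",
                "PolicyType": "TargetTrackingScaling",
                "ScalingTargetId": {"Ref": "ServiceScalableTarget"},
                "TargetTrackingScalingPolicyConfiguration": {
                    "TargetValue": cpu_target,
                    "PredefinedMetricSpecification": {
                        "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
                    },
                },
            },
        },
    }
```

A call such as `build_scaling_resources("pool-cluster", "pool-web")` (both names hypothetical) returns a fragment that could be merged into the `Resources` section of a template.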

Integrated GitHub for Source Control:

Enabled seamless version control and collaboration. Ensured traceability and transparency in code changes.

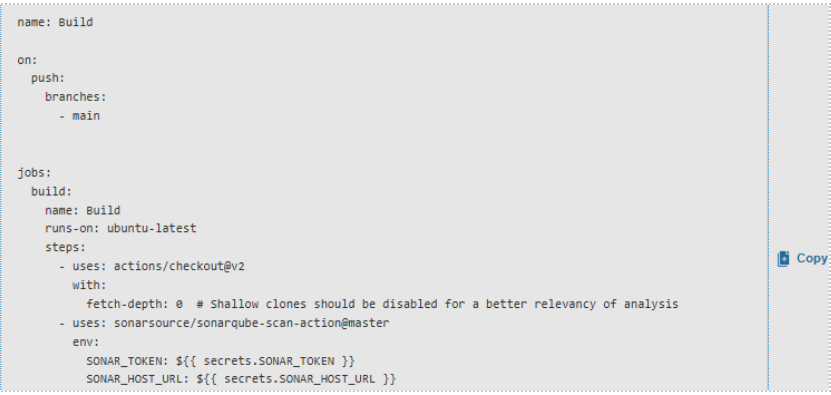

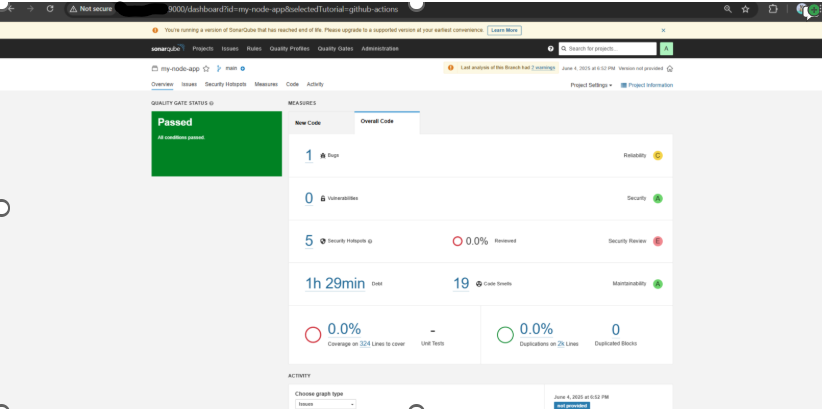

Established CI/CD Pipeline:

Configured AWS CodePipeline and CodeBuild for automated builds and deployments. Enabled continuous integration and delivery with minimal manual intervention.
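One detail worth illustrating is the hand-off between the build and deploy stages. Assuming the pipeline uses CodePipeline's standard Amazon ECS deploy action (the case study does not say which deploy action was chosen), the build stage must output an `imagedefinitions.json` file that maps each container name to the image that was just built. A minimal sketch of a helper that a build step could call is shown below; the function names, the container name, and the image URI in the usage note are hypothetical.

```python
import json
from pathlib import Path


def image_definitions(container_name: str, image_uri: str) -> str:
    """Return the JSON body of imagedefinitions.json for one container."""
    if not container_name or not image_uri:
        raise ValueError("container_name and image_uri must be non-empty")
    # The file is a JSON array with one object per container to update.
    return json.dumps([{"name": container_name, "imageUri": image_uri}])


def write_image_definitions(
    container_name: str, image_uri: str, out_dir: str = "."
) -> Path:
    """Write imagedefinitions.json into out_dir and return its path."""
    path = Path(out_dir) / "imagedefinitions.json"
    path.write_text(image_definitions(container_name, image_uri), encoding="utf-8")
    return path
```

For example, `write_image_definitions("web", "123456789012.dkr.ecr.us-east-1.amazonaws.com/app:abc123")` would write the file into the current directory, where the build stage can publish it as an output artifact.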

Streamlined Deployment Workflow:

Automated the entire release process from code commit to production deployment. Reduced downtime and manual errors, ensuring consistent and reliable updates.

Enhanced Operational Efficiency:

Eliminated manual overhead, allowing the team to focus on innovation. Improved deployment speed and system reliability.

DevOps in Action: Practices That Powered the Transformation

Version Control: GitHub integration enabled seamless collaboration and change tracking.

CI/CD Pipelines: Automated deployment workflows ensured rapid, reliable software delivery.

Infrastructure as Code (IaC): AWS CloudFormation enabled consistent, version-controlled environment provisioning.

Technical Results:

– 85% faster deployments, from days to minutes

– Zero downtime during deployments

– 90% reduction in human error through automation

– 100% infrastructure consistency with IaC

– Improved scalability during peak traffic

– Shift from maintenance to innovation for the dev team

Lessons Learned:

– Plan network architecture early to ensure security and scalability.

– Automate everything, from infrastructure to deployments.

– Use managed services to reduce complexity and operational overhead.

– Integrate monitoring and security from the start.

About the Partner: Cogniv Technologies

Cogniv Technologies is an AWS Advanced Tier Partner specializing in:

– Cloud-native architecture

– DevOps and CI/CD automation

– FinOps and cloud cost optimization

Their customer-first approach and deep AWS expertise made them the ideal partner for Pool Engineering’s digital transformation.

Conclusion:

Pool Engineering successfully transitioned from a manually managed setup to a modern, scalable cloud platform. With AWS and Cogniv Technologies, the company now delivers a seamless digital experience that matches the excellence of its in-person service, setting the stage for future growth and innovation.